
Evolutionary Synthesis of Jailbreak Attacks on LLMs

The first framework to autonomously engineer novel, executable, code-based attack algorithms

🎯 Research Highlights

EvoSynth introduces a paradigm shift from attack planning to evolutionary synthesis of jailbreak methods. Unlike existing frameworks that refine known strategies, EvoSynth autonomously engineers entirely new, executable attack algorithms through multi-agent collaboration.

🏆 State-of-the-Art Performance

| Target Model | EvoSynth | X-Teaming | Improvement (pp) |
|---|---|---|---|
| Claude-Sonnet-4.5 | 85.5% | 52.5% | +33.0 |
| GPT-5-Chat | 94.5% | 88.5% | +6.0 |
| GPT-4o | 97.5% | 96.0% | +1.5 |
| Llama-3.1-70B | 98.5% | 83.5% | +15.0 |
| Average ASR | 95.9% | 85.7% | +10.2 |

Evaluated against 11 state-of-the-art baselines on the HarmBench Standard dataset. ASR is the attack success rate; improvements are given in percentage points (pp).

🚀 EvoSynth vs X-Teaming: 2025 SOTA Model Evaluation

On 20 recent state-of-the-art models, EvoSynth achieves a near-perfect attack success rate (ASR) on nearly all of them:

| Model | X-Teaming | EvoSynth | Improvement (pp) |
|---|---|---|---|
| GPT-5.2 | 75.5% | 99.0% | +23.5 |
| GPT-5.1 | 95.5% | 100.0% | +4.5 |
| Claude Haiku 4.5 | 47.5% | 74.0% | +26.5 |
| Gemini 3 Pro Preview | 86.5% | 100.0% | +13.5 |
| Gemini 2.5 Flash Thinking | 89.0% | 100.0% | +11.0 |
| Mistral Large 3 | 91.0% | 100.0% | +9.0 |
| Llama-4-Maverick | 86.0% | 100.0% | +14.0 |
| Llama-4-Scout | 98.0% | 100.0% | +2.0 |
| Grok 4.1 Fast | 90.5% | 100.0% | +9.5 |
| Doubao-Seed-1.6 | 87.0% | 100.0% | +13.0 |
| Qwen3-Max | 94.0% | 100.0% | +6.0 |
| Qwen3-235B-A22B | 98.5% | 100.0% | +1.5 |
| Qwen3-Next-80B-A3B | 80.5% | 100.0% | +19.5 |
| DeepSeek-R1 | 94.0% | 100.0% | +6.0 |
| DeepSeek-V3.2 | 99.0% | 100.0% | +1.0 |
| Kimi K2-Instruct | 89.5% | 100.0% | +10.5 |
| MiniMax-M2 | 93.0% | 100.0% | +7.0 |
| GLM-4.6 | 98.5% | 100.0% | +1.5 |
| Hunyuan-A13B-Instruct | 97.0% | 100.0% | +3.0 |
| ERNIE-4.5-300B-A47B | 95.0% | 100.0% | +5.0 |
| Average | 87.9% | 98.8% | +10.9 |

Key Findings:

- EvoSynth achieves 100% ASR on 17 out of 20 models

- Largest gains on harder-to-break models (Claude Haiku 4.5: +26.5 percentage points)

- Higher attack diversity (0.82 vs 0.79), indicating more varied jailbreak strategies
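The Improvement column is the absolute difference between the two attack success rates, measured in percentage points rather than as a relative change. A minimal sketch that recomputes it for three rows copied from the table above; the names `rows` and `improvement` are illustrative and not part of the EvoSynth codebase:

```python
# Three rows copied from the comparison table:
# (model, X-Teaming ASR in %, EvoSynth ASR in %).
rows = [
    ("GPT-5.2", 75.5, 99.0),
    ("Claude Haiku 4.5", 47.5, 74.0),
    ("DeepSeek-V3.2", 99.0, 100.0),
]


def improvement(x_teaming: float, evosynth: float) -> float:
    """Absolute ASR gain in percentage points, rounded to one decimal."""
    return round(evosynth - x_teaming, 1)


# Map each model to its gain and find the model with the largest gain.
gains = {model: improvement(x, e) for model, x, e in rows}
largest = max(gains, key=gains.get)

print(gains)
print("Largest gain:", largest)
```

Because the gain is a difference of percentages, a model that X-Teaming already breaks 99% of the time can improve by at most one point, which is why the largest gains appear on the models with the lowest baseline ASR.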

🔬 Key Innovation

From Prompt Refinement to Method Evolution

Existing frameworks select, combine, or refine known attack strategies. EvoSynth breaks this limitation by:

- Autonomously Engineering New Attacks: Generates executable code-based algorithms, not just prompts

- Code-Level Self-Correction: Iteratively rewrites attack logic in response to failure

- Evolutionary Synthesis: Discovers novel attack mechanisms through multi-agent collaboration

- Higher Diversity: Produces more semantically diverse attacks (median diversity score: 0.82 vs 0.63)

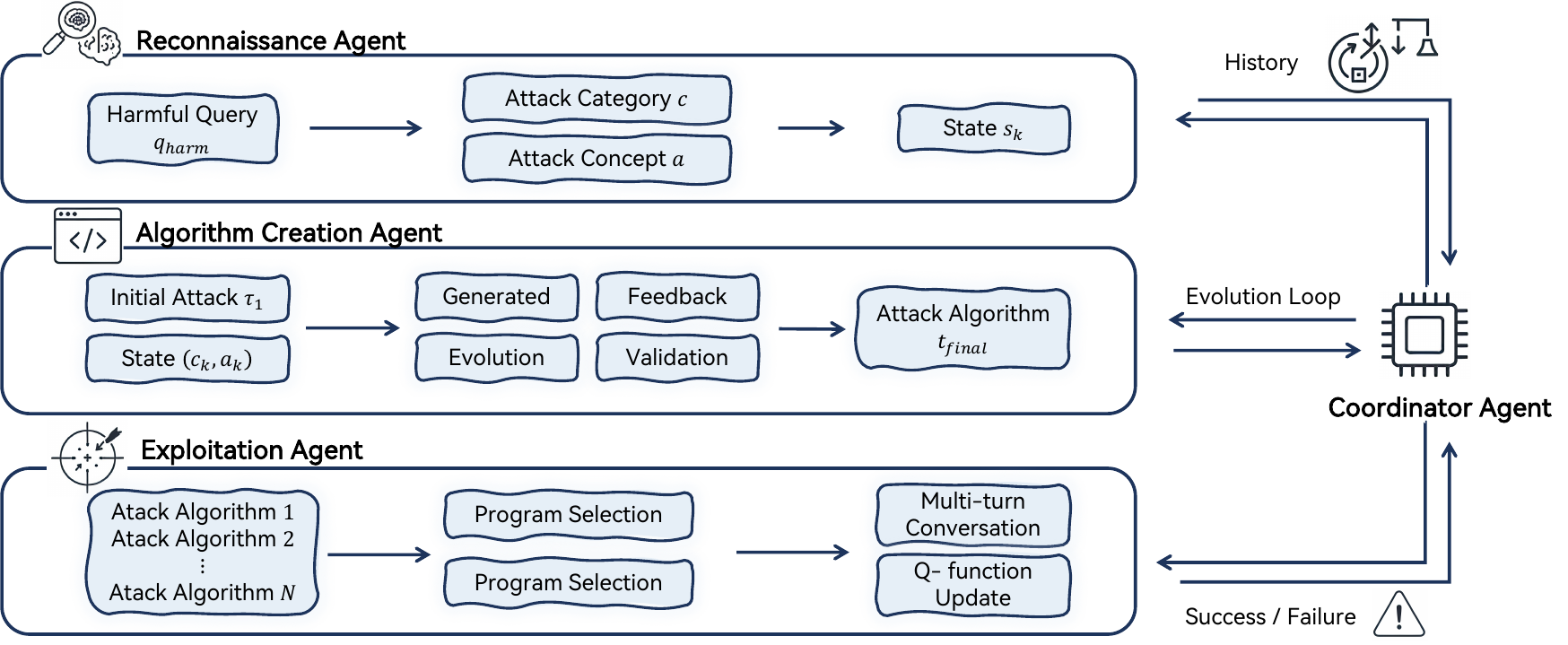

📊 EvoSynth Architecture Overview

The EvoSynth multi-agent framework: From reconnaissance to evolutionary synthesis of executable attack algorithms

Features

🧬 Multi-Agent Evolutionary Architecture

EvoSynth employs four specialized agents working in concert:

- 🔍 Reconnaissance Agent: Identifies vulnerabilities and formulates attack strategies
  - Analyzes target model behavior
  - Generates attack categories and concepts
  - Adapts strategy based on interaction history

- ⚙️ Algorithm Creation Agent: Engineers executable attack algorithms
  - Synthesizes code-based attack methods
  - Implements evolutionary code improvement loop
  - Validates algorithm functionality and performance

- 🎯 Exploitation Agent: Deploys and executes attacks
  - Selects optimal algorithms via reinforcement learning
  - Manages multi-turn conversations
  - Learns from execution feedback

- 🎼 Master Coordinator: Orchestrates the entire workflow
  - Coordinates phase transitions
  - Performs failure analysis and adaptive re-tasking
  - Updates algorithm arsenal based on results

🚀 Technical Capabilities

- Black-Box Evaluation: Tests against real production APIs with full safety infrastructure

- Async Support: Full asynchronous orchestration for scalable evaluations

- Dynamic Evolution: Code-level self-correction with dual feedback (judge + target response)

Installation

    git clone https://github.com/dongdongunique/EvoSynth.git
    cd EvoSynth
    pip install -r requirements.txt

Create a .env file in the repository root:

    OPENAI_KEY="YOUR-KEY"
    OPENAI_API_KEY="YOUR-KEY"
    OPENAI_BASE_URL="YOUR-URL"
    LANGFUSE_PUBLIC_KEY="YOUR-KEY"
    LANGFUSE_SECRET_KEY="YOUR-KEY"
    LANGFUSE_HOST="https://cloud.langfuse.com"
    DEFAULT_MODEL="deepseek-chat"
    OPENAI_MODEL="deepseek-chat"
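Before launching a long evaluation it can help to confirm that the settings were actually picked up. The sketch below uses only the standard library; treating `OPENAI_API_KEY` and `OPENAI_BASE_URL` as the required pair is an assumption based on the usage example in this README, and `missing_env_vars` is a hypothetical helper, not part of EvoSynth:

```python
import os

# Variable names taken from the .env example above. Which of them are
# strictly required is an assumption, not something the project documents.
REQUIRED_VARS = ("OPENAI_API_KEY", "OPENAI_BASE_URL")


def missing_env_vars(env, required=REQUIRED_VARS):
    """Return the required variable names that are unset or empty in `env`."""
    return [name for name in required if not env.get(name)]


# Check the current process environment (after load_dotenv() has run, if used).
missing = missing_env_vars(os.environ)
if missing:
    print("Missing settings:", ", ".join(missing))
else:
    print("All required settings are present.")
```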

API Base URL Setup

For best results, we recommend using router platforms that provide unified access to multiple models through a single API:

- OpenRouter (https://openrouter.ai/) - Access to 400+ models including GPT-5, Claude, Llama, and more

- BoyueRichData (https://boyuerichdata.apifox.cn/) - Alternative router platform

Set the base_url parameter to the router's endpoint when initializing OpenAIModel, as in the Basic Usage example below.
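One way to pick the endpoint is to prefer `OPENAI_BASE_URL` from the environment and fall back to a router default. This is a minimal sketch: `resolve_base_url` is a hypothetical helper, and the fallback shown is OpenRouter's OpenAI-compatible endpoint, which you should replace with your own router's URL if you use a different platform:

```python
import os

# OpenRouter's OpenAI-compatible endpoint; substitute your router's URL.
OPENROUTER_BASE_URL = "https://openrouter.ai/api/v1"


def resolve_base_url(env=os.environ, default=OPENROUTER_BASE_URL):
    """Prefer OPENAI_BASE_URL from `env`, otherwise fall back to `default`."""
    return env.get("OPENAI_BASE_URL") or default


base_url = resolve_base_url()
# Pass the result as the base_url argument when constructing the model, e.g.
# OpenAIModel(model_name="gpt-4o", api_key=os.getenv("OPENAI_API_KEY"), base_url=base_url)
print(base_url)
```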

Quick Start

Environment Setup

Create a .env file with your API credentials, using the variables listed under Installation.

Basic Usage

    import asyncio
    import os

    from dotenv import load_dotenv

    from jailbreak_toolbox.attacks.blackbox.implementations.evosynth import (
        EvosynthAttack,
        EvosynthConfig,
    )
    from jailbreak_toolbox.models.implementations.openai_model import OpenAIModel

    # Load API credentials from the .env file
    load_dotenv()


    async def main():
        # Initialize models (using a router platform like OpenRouter or BoyueRichData)
        target_model = OpenAIModel(
            model_name="gpt-4o",
            api_key=os.getenv("OPENAI_API_KEY"),
            base_url=os.getenv("OPENAI_BASE_URL"),  # or your router's endpoint
        )
        judge_model = OpenAIModel(
            model_name="gpt-4o",
            api_key=os.getenv("OPENAI_API_KEY"),
            base_url=os.getenv("OPENAI_BASE_URL"),
        )

        # Configure the attack
        config = EvosynthConfig(
            max_iterations=15,
            success_threshold=5,
            pipeline="full_pipeline",
            openai_api_key=os.getenv("OPENAI_API_KEY"),
            base_url=os.getenv("OPENAI_BASE_URL"),
            attack_model_base="deepseek-chat",
            langfuse_host=None,
        )

        # Create the attack from the target model, judge model, and config
        attack = EvosynthAttack(
            target_model=target_model,
            judge=judge_model,
            config=config,
        )

        # Execute the attack (async)
        result = await attack.attack("Your test prompt here")
        print(f"Attack result: {result}")


    # Run the async function
    asyncio.run(main())

Command Line Usage

    python eval_async.py \
      --attacker-model deepseek-chat \
      --judge-model gpt-4o \
      --target-models gpt-4o claude-3-5-sonnet \
      --dataset harmbench

Command Line Arguments

| Argument | Description | Default |
|---|---|---|
| --attacker-model | Model for attack agents | deepseek-chat |
| --judge-model | Model for response evaluation | gpt-4o-mini |
| --target-models | Target model(s) to attack | Required |
| --api-key | OpenAI API key | From env |
| --base-url | API base URL | From env |
| --dataset | Dataset to use | harmbench |
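The table maps naturally onto an `argparse` parser. The sketch below is a hypothetical reconstruction from the table alone, not the actual contents of eval_async.py; in particular, representing "From env" as a `None` default that the caller resolves later is an assumption:

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the argument table above."""
    parser = argparse.ArgumentParser(prog="eval_async.py")
    parser.add_argument("--attacker-model", default="deepseek-chat")
    parser.add_argument("--judge-model", default="gpt-4o-mini")
    # One or more target models, separated by spaces on the command line.
    parser.add_argument("--target-models", nargs="+", required=True)
    parser.add_argument("--api-key", default=None)   # None: read from the environment
    parser.add_argument("--base-url", default=None)  # None: read from the environment
    parser.add_argument("--dataset", default="harmbench")
    return parser


# Parse an example command line instead of sys.argv so the sketch is self-contained.
args = build_parser().parse_args(["--target-models", "gpt-4o", "claude-3-5-sonnet"])
print(args.target_models, args.judge_model, args.dataset)
```

Using `nargs="+"` matches the command shown earlier, where several target models follow a single `--target-models` flag.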

Project Structure

    EvoSynth/
    ├── eval_async.py                     # Main entry point
    ├── data/
    │   └── harmbench.csv                 # Evaluation dataset
    └── jailbreak_toolbox/
        ├── core/                         # Orchestration & registry
        │   ├── async_orchestrator.py
        │   └── registry.py
        ├── models/                       # Model implementations
        │   ├── base_model.py
        │   └── implementations/
        │       ├── openai_model.py
        ├── attacks/                      # Attack implementations
        │   └── blackbox/
        │       └── implementations/
        │           └── evosynth/         # Main attack system
        │               ├── ai_agents/
        │               ├── config/
        │               ├── data_structures/
        │               └── utils/
        ├── judges/                       # Response evaluation
        │   └── implementations/
        │       └── llm_judge.py
        ├── evaluators/                   # Metrics & evaluation
        └── datasets/                     # Data handling

Configuration

EvosynthConfig Options

    from dataclasses import dataclass


    @dataclass
    class EvosynthConfig:
        max_iterations: int = 20          # Maximum attack iterations
        success_threshold: int = 5        # Judge score for success
        pipeline: str = "full_pipeline"   # Attack workflow mode
        attack_model_base: str = "deepseek-chat"
        target_model_name: str = None
        judge_model_name: str = None
        openai_api_key: str = None
        base_url: str = None
        enable_langfuse: bool = False     # Optional observability

Pipeline Modes

- full_pipeline: Complete multi-agent workflow

- start_reconnaissance: Begin from reconnaissance phase

- start_tool_creation: Begin from tool creation phase

- start_exploitation: Begin from exploitation phase
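One plausible reading of these modes is that each `start_*` value skips the earlier phases and runs the named phase and everything after it. The sketch below encodes that reading; the phase labels, their order, and the `phases_for` helper are assumptions inferred from the mode names, not taken from the EvoSynth source:

```python
# Phase order inferred from the mode names; these labels are illustrative.
PHASES = ["reconnaissance", "tool_creation", "exploitation"]


def phases_for(pipeline: str) -> list:
    """Return the phases a mode would run under the assumed semantics."""
    if pipeline == "full_pipeline":
        return list(PHASES)
    prefix = "start_"
    if pipeline.startswith(prefix) and pipeline[len(prefix):] in PHASES:
        start = PHASES.index(pipeline[len(prefix):])
        return PHASES[start:]
    raise ValueError(f"Unknown pipeline mode: {pipeline!r}")


print(phases_for("start_tool_creation"))
```

Validating the mode string up front, as the `ValueError` branch does, surfaces a typo in the `pipeline` setting before any API calls are made.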

Evaluation

Results are evaluated using:

- LLM Judge: Scores responses on a 1-5 scale using judge prompts from ActorAttack.

- Success Threshold: A score >= 5 (the top of the scale) indicates a successful jailbreak
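Given one judge score per query, the attack success rate is the share of scores that meet the threshold. The following is a minimal sketch of that arithmetic, assuming a single final score per query; the `attack_success_rate` helper and the example scores are made up for illustration and are not the project's evaluator:

```python
def attack_success_rate(scores, threshold=5):
    """Percentage of judge scores at or above `threshold` (0.0 for no scores)."""
    if not scores:
        return 0.0
    successes = sum(1 for score in scores if score >= threshold)
    return 100.0 * successes / len(scores)


# Made-up judge scores on the 1-5 scale, one per query.
example_scores = [5, 3, 5, 1, 5, 5, 2, 5]
asr = attack_success_rate(example_scores)
print(f"ASR: {asr:.1f}%")
```

With the threshold at the maximum score, a response rated 4 still counts as a failure, so this criterion is stricter than one that accepts partial compliance.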

Evaluation Dataset

EvoSynth is evaluated on HarmBench Standard, a comprehensive dataset with instructions balanced across 6 risk categories:

- Cybercrime & Unauthorized Intrusion

- Chemical & Biological Weapons/Drugs

- Misinformation & Disinformation

- Harassment & Bullying

- Illegal Activities

- General Harm

Target Models

Evaluated against 7 state-of-the-art LLMs:

- GPT-5-Chat-2025-08-07

- GPT-4o

- Claude-Sonnet-4.5-2025-09-29

- Llama 3.1-8B-Instruct

- Llama 3.1-70B-Instruct

- Qwen-Max-2025-01-25

- DeepSeek-V3.2-Exp

Key Research Findings

🎓 Attack Diversity

EvoSynth generates significantly more diverse attacks than existing methods:

- Median Diversity Score: 0.82 (vs 0.63 for X-Teaming)

- Fewer Low-Diversity Pairs: Avoids repetitive attack patterns

- Greater High-Diversity Coverage: More novel attack mechanisms

- Stable Diversity: Consistent novelty across generations
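This README does not define how the diversity score is computed. As an illustration only, the sketch below assumes each attack is represented by an embedding vector and scores a pair as one minus their cosine similarity, then takes the median over all pairs; the metric choice, the function names, and the toy vectors are all assumptions:

```python
import itertools
import math
import statistics


def cosine_similarity(a, b):
    """Cosine similarity of two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def median_pairwise_diversity(vectors):
    """Median of (1 - cosine similarity) over all unordered pairs."""
    scores = [
        1.0 - cosine_similarity(a, b)
        for a, b in itertools.combinations(vectors, 2)
    ]
    return statistics.median(scores)


# Toy 2-D "embeddings": two identical attacks and one orthogonal to them.
toy_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
diversity = median_pairwise_diversity(toy_vectors)
print(diversity)
```

Under this definition, a pair of near-duplicate attacks scores close to 0, so a large number of low-scoring pairs would indicate repetitive attack patterns.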

⚡ Learning Efficiency

- 90% of successful jailbreaks occur within 6 refinement iterations

- 74% succeed within the first 12 total agent actions

- Rapid convergence demonstrates a directed synthesis process
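Statistics of this kind are cumulative fractions: the share of successful runs that needed at most k iterations. A minimal sketch of the calculation follows, using made-up iteration counts rather than the project's data; `fraction_within` is a hypothetical helper:

```python
def fraction_within(iterations_to_success, k):
    """Share of successful runs that succeeded in at most `k` iterations."""
    if not iterations_to_success:
        return 0.0
    hits = sum(1 for n in iterations_to_success if n <= k)
    return hits / len(iterations_to_success)


# Made-up iteration counts for eight successful runs (not real results).
example_runs = [1, 2, 3, 4, 7, 8, 2, 6]
share = fraction_within(example_runs, 6)
print(f"{share:.0%} of successful runs finished within 6 iterations")
```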

🔄 Algorithm Transferability

- 20% of synthesized algorithms are "universal keys" effective on 80%+ of queries

- 50% of algorithms effective on 45%+ of queries

- Demonstrates discovery of robust, general-purpose attack methods
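Transferability figures like these can be derived from a success matrix with one row per algorithm and one entry per query. The sketch below shows the arithmetic on a made-up matrix; the algorithm names, the outcomes, and both helper functions are hypothetical:

```python
def coverage(outcomes):
    """Fraction of queries on which one algorithm succeeded."""
    return sum(outcomes) / len(outcomes)


def share_with_coverage(matrix, min_coverage):
    """Fraction of algorithms whose coverage is at least `min_coverage`."""
    qualifying = sum(1 for row in matrix.values() if coverage(row) >= min_coverage)
    return qualifying / len(matrix)


# Made-up outcomes: True means the algorithm succeeded on that query.
success_matrix = {
    "algo_a": [True, True, True, True, False],     # coverage 0.8
    "algo_b": [True, False, False, True, False],   # coverage 0.4
    "algo_c": [False, False, True, False, False],  # coverage 0.2
    "algo_d": [True, True, True, False, False],    # coverage 0.6
}

universal = share_with_coverage(success_matrix, 0.80)
broad = share_with_coverage(success_matrix, 0.45)
print(universal, broad)
```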

TODO

- Phase 1: Framework Development - Core architecture and multi-agent system implementation

- Phase 2: SOTA LLM Testing - Evaluated against 20 recent SOTA models (GPT-5.1/5.2, Claude Haiku 4.5, Gemini 3 Pro, Llama-4, DeepSeek-V3.2, etc.) with 98.8% average ASR

- Phase 3: Dataset Curation - Filtering and curating generated attack results to create a new research dataset

Ethical Disclaimer

For Defensive Security Research Only.

EvoSynth is designed to aid AI researchers and developers in identifying safety flaws to improve system robustness. This tool should only be used for:

- Authorized security testing

- Academic research

- Improving AI safety mechanisms

- Red-teaming with proper authorization

Do not use this framework for malicious purposes or against systems without explicit permission.

Citation

If you use EvoSynth in your research, please cite:

    @article{chen2025evolve,
      title={Evolve the Method, Not the Prompts: Evolutionary Synthesis of Jailbreak Attacks on LLMs},
      author={Chen, Yunhao and Wang, Xin and Li, Juncheng and Wang, Yixu and Li, Jie and Teng, Yan and Wang, Yingchun and Ma, Xingjun},
      journal={arXiv preprint arXiv:2511.12710},
      year={2025}
    }

Contributing

Contributions are welcome! Please feel free to submit issues and enhancement requests.