mirror of

https://github.com/tdurieux/anonymous_github.git

synced 2026-02-16 12:22:43 +00:00

Compare commits

89 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 696a465d5c | | |
| | ecfd69bd37 | | |
| | d8de3f189a | | |
| | f72a662750 | | |
| | 2f5d7a1089 | | |
| | 92347fbcfb | | |
| | 48ae137f96 | | |
| | 84877506a6 | | |
| | 275d4827a8 | | |
| | 9fea119f50 | | |
| | 68d96ad82e | | |
| | f54b9f355b | | |
| | e24d1b4630 | | |
| | 406330d957 | | |
| | 0997e19d3d | | |
| | 897426743f | | |
| | 2f916c6968 | | |
| | 027f14ffbc | | |
| | 4f6c1d25fc | | |
| | 6c4363182b | | |
| | 66d5d91e3e | | |
| | deba2b567e | | |
| | e5ffad6364 | | |
| | f1d6e4534d | | |
| | dde7fa2d72 | | |
| | abddf10c11 | | |
| | 53ea31008a | | |
| | 7d8b087a5d | | |
| | a23f089a8a | | |
| | ee82d3c12a | | |
| | 6226f32471 | | |
| | 3bf6864472 | | |
| | 083026f168 | | |
| | 35d796f871 | | |
| | 7e2c490e4b | | |
| | c9acb7b899 | | |
| | 8ac3a66a30 | | |
| | 3627096e63 | | |
| | 4293fa01b2 | | |
| | 13e5e35d46 | | |
| | 0a021d6e61 | | |
| | a07c8d4635 | | |
| | 66341ec410 | | |
| | 5d1eb333cf | | |
| | 9ecfdae9d7 | | |
| | 0afcb9733a | | |
| | 3a55a4d5b0 | | |
| | e94a5f164a | | |
| | d29d4281ab | | |
| | f8a0315a1d | | |
| | ed0dd82cfb | | |
| | d3f9e67c62 | | |
| | 344ecf2a33 | | |
| | ef1a2bfa4a | | |
| | f1fe8eff14 | | |
| | 38d3e54d0b | | |
| | 74aacd223d | | |
| | 8221b2ee7f | | |
| | c59e202124 | | |
| | d825cc1d69 | | |
| | f3b8860838 | | |
| | a558a6c2bd | | |
| | 7422a3a262 | | |
| | 3c18884de2 | | |
| | 1d4eb7a1b0 | | |
| | b6049c4ed2 | | |
| | 7dbfdb3056 | | |
| | 6caca33145 | | |
| | 8c8f8dbd90 | | |
| | 83a9505a11 | | |
| | 74d625d6d4 | | |
| | 2b10b10207 | | |
| | 2a5f22a483 | | |
| | 9cde774273 | | |
| | 95354292b5 | | |
| | da194d9d71 | | |
| | fb9bbe105a | | |
| | 99f837c3cf | | |
| | ec6098b3a1 | | |
| | 3ab9b0c7a4 | | |
| | cff3636523 | | |
| | 32d1884450 | | |
| | 5c72f54db5 | | |
| | 2e36b72a7f | | |
| | 73f7582fd2 | | |
| | 3eee62d6ad | | |
| | 696b24a648 | | |
| | 7c5fcfe069 | | |
| | 6debb6aa0f | | |

@@ -1,3 +1,5 @@

/repositories

repo/

db_backups

db_backups

build

node_modules

.github

17

Dockerfile


@@ -1,22 +1,25 @@

FROM node:15-slim

FROM node:18-slim

ENV PORT 5000

EXPOSE $PORT

WORKDIR /app

RUN npm install pm2 -g

RUN pm2 install typescript

RUN npm install pm2 -g && pm2 install typescript && npm cache clean --force;

COPY package.json .

COPY package-lock.json .

RUN npm install

COPY tsconfig.json .

COPY ecosystem.config.js .

COPY healthcheck.js .

COPY src .

COPY src ./src

COPY public ./public

COPY index.ts .

COPY public .

COPY config.ts .

RUN npm install && npm run build && npm cache clean --force

CMD [ "pm2-runtime", "ecosystem.config.js"]

75

README.md


@@ -1,46 +1,23 @@

|

||||

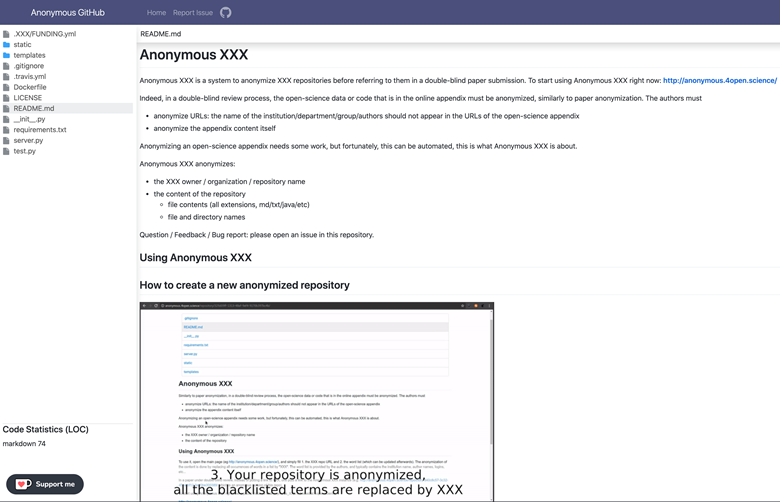

# Anonymous Github

|

||||

|

||||

Anonymous Github is a system to anonymize Github repositories before referring to them in a double-anonymous paper submission.

|

||||

To start using Anonymous Github right now: **[http://anonymous.4open.science/](http://anonymous.4open.science/)**

|

||||

Anonymous Github is a system that helps anonymize Github repositories for double-anonymous paper submissions. A public instance of Anonymous Github is hosted at https://anonymous.4open.science/.

|

||||

|

||||

Indeed, in a double-anonymous review process, the open-science data or code that is in the online appendix must be anonymized, similarly to paper anonymization. The authors must

|

||||

|

||||

|

||||

- anonymize URLs: the name of the institution/department/group/authors should not appear in the URLs of the open-science appendix

|

||||

- anonymize the appendix content itself

|

||||

|

||||

Anonymizing an open-science appendix needs some work, but fortunately, this can be automated, this is what Anonymous Github is about.

|

||||

Anonymous Github anonymizes the following:

|

||||

|

||||

Anonymous Github anonymizes:

|

||||

- Github repository owner, organization, and name

|

||||

- File and directory names

|

||||

- File contents of all extensions, including markdown, text, Java, etc.

|

||||

|

||||

- the Github owner / organization / repository name

|

||||

- the content of the repository

|

||||

- file contents (all extensions, md/txt/java/etc)

|

||||

- file and directory names

|

||||

## Usage

|

||||

|

||||

Question / Feedback / Bug report: please open an issue in this repository.

|

||||

### Public instance

|

||||

|

||||

## Using Anonymous Github

|

||||

**https://anonymous.4open.science/**

|

||||

|

||||

## How to create a new anonymized repository

|

||||

|

||||

To use it, open the main page (e.g., [http://anonymous.4open.science/](http://anonymous.4open.science/)), login with GitHub, and click on "Anonymize".

|

||||

Simply fill 1. the Github repo URL and 2. the id of the anonymized repository, 3. the terms to anonymize (which can be updated afterward).

|

||||

The anonymization of the content is done by replacing all occurrences of words in a list by "XXXX" (can be changed in the configuration).

|

||||

The word list is provided by the authors, and typically contains the institution name, author names, logins, etc...

|

||||

The README is anonymized as well as all files of the repository. Even filenames are anonymized.
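
The replacement step described above (every occurrence of a listed term is replaced by a mask such as "XXXX") can be sketched roughly as follows. This is only an illustration under stated assumptions, not the project's actual code: `anonymizeContent` and `escapeRegExp` are hypothetical names, and case-insensitive matching is an assumption the README does not confirm.

```typescript
// Hypothetical helper: escape regex metacharacters so a term such as
// "a.b" is matched literally instead of as a pattern.
function escapeRegExp(term: string): string {
  return term.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Hypothetical sketch of term masking: replace every occurrence of each
// term with the mask. Case-insensitive matching is an assumption.
function anonymizeContent(
  content: string,
  terms: string[],
  mask: string = "XXXX"
): string {
  let result = content;
  for (const term of terms) {
    const trimmed = term.trim();
    if (trimmed === "") continue; // ignore empty entries in the word list
    const pattern = new RegExp(escapeRegExp(trimmed), "gi");
    // A replacer function keeps "$" sequences in the mask literal.
    result = result.replace(pattern, () => mask);
  }
  return result;
}

const sample = "Written by Jane Doe at ACME University";
console.log(anonymizeContent(sample, ["Jane Doe", "ACME"]));
```

The same function could presumably be applied to file and directory names as well as file contents, since the README states that filenames are anonymized too.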

|

||||

|

||||

In a paper under double-anonymous review, instead of putting a link to Github, one puts a link to the Anonymous Github instance (e.g.

|

||||

<http://anonymous.4open.science/r/840c8c57-3c32-451e-bf12-0e20be300389/> which is an anonymous version of this repo).

|

||||

|

||||

To start using Anonymous Github right now, a public instance of anonymous_github is hosted at 4open.science:

|

||||

|

||||

**[http://anonymous.4open.science/](http://anonymous.4open.science/)**

|

||||

|

||||

## What is the scope of anonymization?

|

||||

|

||||

In double-anonymous peer-review, the boundary of anonymization is the paper plus its online appendix, and only this, it's not the whole world. Googling any part of the paper or the online appendix can be considered as a deliberate attempt to break anonymity ([explanation](http://www.monperrus.net/martin/open-science-double-anonymous))

|

||||

|

||||

## CLI

|

||||

### CLI

|

||||

|

||||

This CLI tool allows you to anonymize your GitHub repositories locally, generating an anonymized zip file based on your configuration settings.

|

||||

|

||||

@@ -51,13 +28,10 @@ npm install -g @tdurieux/anonymous_github

|

||||

# Run the Anonymous GitHub CLI tool

|

||||

anonymous_github

|

||||

```

|

||||

## How does it work?

|

||||

|

||||

Anonymous Github either download the complete repository and anonymize the content of the file or proxy the request to GitHub. In both case, the original and anonymized versions of the file are cached on the server.

|

||||

### Own instance

|

||||

|

||||

## Installing Anonymous Github

|

||||

|

||||

1. Clone the repository

|

||||

#### 1. Clone the repository

|

||||

|

||||

```bash

|

||||

git clone https://github.com/tdurieux/anonymous_github/

|

||||

@@ -65,9 +39,9 @@ cd anonymous_github

|

||||

npm i

|

||||

```

|

||||

|

||||

2. Configure the Github token

|

||||

#### 2. Configure the GitHub token

|

||||

|

||||

Create a file `.env` that contains

|

||||

Create a `.env` file with the following contents:

|

||||

|

||||

```env

|

||||

GITHUB_TOKEN=<GITHUB_TOKEN>

|

||||

@@ -79,19 +53,27 @@ DB_PASSWORD=

|

||||

AUTH_CALLBACK=http://localhost:5000/github/auth,

|

||||

```

|

||||

|

||||

`GITHUB_TOKEN` can be generated here: https://github.com/settings/tokens/new with `repo` scope.

|

||||

`CLIENT_ID` and `CLIENT_SECRET` are the tokens are generated when you create a new GitHub app https://github.com/settings/applications/new.

|

||||

The callback of the GitHub app needs to be defined as `https://<host>/github/auth` (the same as defined in AUTH_CALLBACK).

|

||||

- `GITHUB_TOKEN` can be generated here: https://github.com/settings/tokens/new with `repo` scope.

|

||||

- `CLIENT_ID` and `CLIENT_SECRET` are the tokens are generated when you create a new GitHub app https://github.com/settings/applications/new.

|

||||

- The callback of the GitHub app needs to be defined as `https://<host>/github/auth` (the same as defined in AUTH_CALLBACK).

|

||||

|

||||

3. Run Anonymous Github

|

||||

#### 3. Start Anonymous Github server

|

||||

|

||||

```bash

|

||||

docker-compose up -d

|

||||

```

|

||||

|

||||

4. Go to Anonymous Github

|

||||

#### 4. Go to Anonymous Github

|

||||

|

||||

By default, Anonymous Github uses port 5000. It can be changed in `docker-compose.yml`.

|

||||

Go to http://localhost:5000. By default, Anonymous Github uses port 5000. It can be changed in `docker-compose.yml`. I would recommand to put Anonymous GitHub behind ngnix to handle the https certificates.

|

||||

|

||||

## What is the scope of anonymization?

|

||||

|

||||

In double-anonymous peer-review, the boundary of anonymization is the paper plus its online appendix, and only this, it's not the whole world. Googling any part of the paper or the online appendix can be considered as a deliberate attempt to break anonymity ([explanation](https://www.monperrus.net/martin/open-science-double-blind))

|

||||

|

||||

## How does it work?

|

||||

|

||||

Anonymous Github either download the complete repository and anonymize the content of the file or proxy the request to GitHub. In both case, the original and anonymized versions of the file are cached on the server.

|

||||

|

||||

## Related tools

|

||||

|

||||

@@ -102,3 +84,4 @@ By default, Anonymous Github uses port 5000. It can be changed in `docker-compos

|

||||

## See also

|

||||

|

||||

- [Open-science and double-anonymous Peer-Review](https://www.monperrus.net/martin/open-science-double-blind)

|

||||

- [ACM Policy on Double-Blind Reviewing](https://dl.acm.org/journal/tods/DoubleBlindPolicy)

|

||||

|

||||

60

cli.ts


@@ -2,18 +2,20 @@

|

||||

|

||||

import { config as dot } from "dotenv";

|

||||

dot();

|

||||

process.env.STORAGE = "filesystem";

|

||||

|

||||

import { writeFile } from "fs/promises";

|

||||

import { join } from "path";

|

||||

import { tmpdir } from "os";

|

||||

|

||||

import * as gh from "parse-github-url";

|

||||

import * as inquirer from "inquirer";

|

||||

|

||||

import server from "./src/server";

|

||||

import config from "./config";

|

||||

import GitHubDownload from "./src/source/GitHubDownload";

|

||||

import Repository from "./src/Repository";

|

||||

import AnonymizedRepositoryModel from "./src/database/anonymizedRepositories/anonymizedRepositories.model";

|

||||

import { getRepositoryFromGitHub } from "./src/source/GitHubRepository";

|

||||

|

||||

function generateRandomFileName(size: number) {

|

||||

const characters =

|

||||

@@ -44,18 +46,47 @@ async function main() {

|

||||

name: "terms",

|

||||

message: `Terms to remove from your repository (separated with comma).`,

|

||||

},

|

||||

{

|

||||

type: "string",

|

||||

name: "output",

|

||||

message: `The output folder where to save the zipped repository.`,

|

||||

default: process.cwd(),

|

||||

},

|

||||

]);

|

||||

|

||||

const ghURL = gh(inq.repo) || { owner: "", name: "", branch: "", commit: "" };

|

||||

const ghURL = gh(inq.repo) || {

|

||||

owner: undefined,

|

||||

name: undefined,

|

||||

branch: undefined,

|

||||

commit: undefined,

|

||||

};

|

||||

|

||||

if (!ghURL.owner || !ghURL.name) {

|

||||

throw new Error("Invalid GitHub URL");

|

||||

}

|

||||

|

||||

const ghRepo = await getRepositoryFromGitHub({

|

||||

accessToken: inq.token,

|

||||

owner: ghURL.owner,

|

||||

repo: ghURL.name,

|

||||

});

|

||||

const branches = await ghRepo.branches({

|

||||

accessToken: inq.token,

|

||||

force: true,

|

||||

});

|

||||

const branchToFind = inq.repo.includes(ghURL.branch)

|

||||

? ghURL.branch

|

||||

: ghRepo.model.defaultBranch || "master";

|

||||

const branch = branches.find((b) => b.name === branchToFind);

|

||||

|

||||

const repository = new Repository(

|

||||

new AnonymizedRepositoryModel({

|

||||

repoId: "test",

|

||||

repoId: `${ghURL.name}-${branch?.name}`,

|

||||

source: {

|

||||

type: "GitHubDownload",

|

||||

accessToken: inq.token,

|

||||

branch: ghURL.branch || "master",

|

||||

commit: ghURL.branch || "HEAD",

|

||||

branch: branchToFind,

|

||||

commit: branch?.commit || "HEAD",

|

||||

repositoryName: `${ghURL.owner}/${ghURL.name}`,

|

||||

},

|

||||

options: {

|

||||

@@ -71,27 +102,20 @@ async function main() {

|

||||

})

|

||||

);

|

||||

|

||||

const source = new GitHubDownload(

|

||||

{

|

||||

type: "GitHubDownload",

|

||||

accessToken: inq.token,

|

||||

repositoryName: inq.repo,

|

||||

},

|

||||

repository

|

||||

console.info(

|

||||

`[INFO] Downloading repository: ${repository.model.source.repositoryName} from branch ${repository.model.source.branch} and commit ${repository.model.source.commit}...`

|

||||

);

|

||||

|

||||

console.info("[INFO] Downloading repository...");

|

||||

await source.download(inq.token);

|

||||

const outputFileName = join(tmpdir(), generateRandomFileName(8) + ".zip");

|

||||

await (repository.source as GitHubDownload).download(inq.token);

|

||||

const outputFileName = join(inq.output, generateRandomFileName(8) + ".zip");

|

||||

console.info("[INFO] Anonymizing repository and creation zip file...");

|

||||

await writeFile(outputFileName, repository.zip());

|

||||

await writeFile(outputFileName, await repository.zip());

|

||||

console.log(`Anonymized repository saved at ${outputFileName}`);

|

||||

}

|

||||

|

||||

if (require.main === module) {

|

||||

if (process.argv[2] == "server") {

|

||||

// start the server

|

||||

require("./src/server").default();

|

||||

server();

|

||||

} else {

|

||||

// use the cli interface

|

||||

main();

|

||||

|

||||

16

config.ts


@@ -1,6 +1,7 @@

|

||||

import { resolve } from "path";

|

||||

|

||||

interface Config {

|

||||

SESSION_SECRET: string;

|

||||

REDIS_PORT: number;

|

||||

REDIS_HOSTNAME: string;

|

||||

CLIENT_ID: string;

|

||||

@@ -19,7 +20,7 @@ interface Config {

|

||||

ENABLE_DOWNLOAD: boolean;

|

||||

ANONYMIZATION_MASK: string;

|

||||

PORT: number;

|

||||

HOSTNAME: string;

|

||||

APP_HOSTNAME: string;

|

||||

DB_USERNAME: string;

|

||||

DB_PASSWORD: string;

|

||||

DB_HOSTNAME: string;

|

||||

@@ -35,6 +36,7 @@ interface Config {

|

||||

RATE_LIMIT: number;

|

||||

}

|

||||

const config: Config = {

|

||||

SESSION_SECRET: "SESSION_SECRET",

|

||||

CLIENT_ID: "CLIENT_ID",

|

||||

CLIENT_SECRET: "CLIENT_SECRET",

|

||||

GITHUB_TOKEN: "",

|

||||

@@ -50,7 +52,7 @@ const config: Config = {

|

||||

PORT: 5000,

|

||||

TRUST_PROXY: 1,

|

||||

RATE_LIMIT: 350,

|

||||

HOSTNAME: "anonymous.4open.science",

|

||||

APP_HOSTNAME: "anonymous.4open.science",

|

||||

DB_USERNAME: "admin",

|

||||

DB_PASSWORD: "password",

|

||||

DB_HOSTNAME: "mongodb",

|

||||

@@ -68,11 +70,11 @@ const config: Config = {

|

||||

"in",

|

||||

],

|

||||

STORAGE: "filesystem",

|

||||

S3_BUCKET: null,

|

||||

S3_CLIENT_ID: null,

|

||||

S3_CLIENT_SECRET: null,

|

||||

S3_ENDPOINT: null,

|

||||

S3_REGION: null,

|

||||

S3_BUCKET: process.env.S3_BUCKET,

|

||||

S3_CLIENT_ID: process.env.S3_CLIENT_ID,

|

||||

S3_CLIENT_SECRET: process.env.S3_CLIENT_SECRET,

|

||||

S3_ENDPOINT: process.env.S3_ENDPOINT,

|

||||

S3_REGION: process.env.S3_REGION,

|

||||

};

|

||||

|

||||

for (let conf in process.env) {

|

||||

|

||||

@@ -10,8 +10,6 @@ services:

|

||||

environment:

|

||||

- REDIS_HOSTNAME=redis

|

||||

- DB_HOSTNAME=mongodb

|

||||

volumes:

|

||||

- .:/app

|

||||

ports:

|

||||

- $PORT:$PORT

|

||||

healthcheck:

|

||||

@@ -28,12 +26,19 @@ services:

|

||||

|

||||

redis:

|

||||

image: "redis:alpine"

|

||||

|

||||

restart: always

|

||||

healthcheck:

|

||||

test:

|

||||

- CMD

|

||||

- redis-cli

|

||||

- ping

|

||||

interval: 10s

|

||||

timeout: 10s

|

||||

retries: 5

|

||||

|

||||

mongodb:

|

||||

image: mongo:latest

|

||||

restart: on-failure

|

||||

ports:

|

||||

- "127.0.0.1:27017:27017"

|

||||

environment:

|

||||

MONGO_INITDB_ROOT_USERNAME: $DB_USERNAME

|

||||

MONGO_INITDB_ROOT_PASSWORD: $DB_PASSWORD

|

||||

|

||||

@@ -2,9 +2,9 @@ module.exports = {

|

||||

apps: [

|

||||

{

|

||||

name: "AnonymousGitHub",

|

||||

script: "./index.ts",

|

||||

script: "build/index.js",

|

||||

exec_mode: "fork",

|

||||

watch: true,

|

||||

watch: false,

|

||||

ignore_watch: [

|

||||

"node_modules",

|

||||

"repositories",

|

||||

@@ -12,10 +12,9 @@ module.exports = {

|

||||

"public",

|

||||

".git",

|

||||

"db_backups",

|

||||

"dist",

|

||||

"build",

|

||||

],

|

||||

interpreter: "node",

|

||||

interpreter_args: "--require ts-node/register",

|

||||

},

|

||||

],

|

||||

};

|

||||

|

||||

7023

package-lock.json

generated

File diff suppressed because it is too large

41

package.json


@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "@tdurieux/anonymous_github",

|

||||

"version": "2.1.1",

|

||||

"version": "2.2.0",

|

||||

"description": "Anonymise Github repositories for double-anonymous reviews",

|

||||

"bin": {

|

||||

"anonymous_github": "build/cli.js"

|

||||

@@ -10,7 +10,7 @@

|

||||

"start": "node --inspect=5858 -r ts-node/register ./index.ts",

|

||||

"dev": "nodemon --transpile-only index.ts",

|

||||

"migrateDB": "ts-node --transpile-only migrateDB.ts",

|

||||

"build": "tsc"

|

||||

"build": "rm -rf build && tsc"

|

||||

},

|

||||

"repository": {

|

||||

"type": "git",

|

||||

@@ -30,58 +30,61 @@

|

||||

"build"

|

||||

],

|

||||

"dependencies": {

|

||||

"@octokit/oauth-app": "^4.1.0",

|

||||

"@octokit/rest": "^19.0.5",

|

||||

"@aws-sdk/client-s3": "^3.374.0",

|

||||

"@aws-sdk/node-http-handler": "^3.374.0",

|

||||

"@octokit/oauth-app": "^6.0.0",

|

||||

"@octokit/plugin-paginate-rest": "^8.0.0",

|

||||

"@octokit/rest": "^20.0.1",

|

||||

"@pm2/io": "^5.0.0",

|

||||

"archiver": "^5.3.1",

|

||||

"aws-sdk": "^2.1238.0",

|

||||

"bullmq": "^2.3.2",

|

||||

"compression": "^1.7.4",

|

||||

"connect-redis": "^6.1.3",

|

||||

"decompress-stream-to-s3": "^1.3.1",

|

||||

"connect-redis": "^7.0.1",

|

||||

"decompress-stream-to-s3": "^2.1.1",

|

||||

"dotenv": "^16.0.3",

|

||||

"express": "^4.18.2",

|

||||

"express-rate-limit": "^6.6.0",

|

||||

"express-rate-limit": "^6.8.0",

|

||||

"express-session": "^1.17.3",

|

||||

"express-slow-down": "^1.5.0",

|

||||

"express-slow-down": "^1.6.0",

|

||||

"got": "^11.8.5",

|

||||

"inquirer": "^8.2.5",

|

||||

"istextorbinary": "^6.0.0",

|

||||

"marked": "^4.1.1",

|

||||

"marked": "^5.1.2",

|

||||

"mime-types": "^2.1.35",

|

||||

"mongoose": "^6.6.7",

|

||||

"node-schedule": "^2.1.0",

|

||||

"mongoose": "^7.4.1",

|

||||

"node-schedule": "^2.1.1",

|

||||

"parse-github-url": "^1.0.2",

|

||||

"passport": "^0.6.0",

|

||||

"passport-github2": "^0.1.12",

|

||||

"rate-limit-redis": "^3.0.1",

|

||||

"redis": "^4.3.1",

|

||||

"textextensions": "^5.15.0",

|

||||

"ts-custom-error": "^3.3.0",

|

||||

"rate-limit-redis": "^3.0.2",

|

||||

"redis": "^4.6.7",

|

||||

"textextensions": "^5.16.0",

|

||||

"ts-custom-error": "^3.3.1",

|

||||

"unzip-stream": "^0.3.1",

|

||||

"xml-flow": "^1.0.4"

|

||||

},

|

||||

"devDependencies": {

|

||||

"@types/archiver": "^5.3.1",

|

||||

"@types/compression": "^1.7.1",

|

||||

"@types/connect-redis": "^0.0.18",

|

||||

"@types/connect-redis": "^0.0.20",

|

||||

"@types/express": "^4.17.14",

|

||||

"@types/express-rate-limit": "^6.0.0",

|

||||

"@types/express-session": "^1.17.5",

|

||||

"@types/express-slow-down": "^1.3.2",

|

||||

"@types/got": "^9.6.12",

|

||||

"@types/inquirer": "^8.0.0",

|

||||

"@types/marked": "^4.0.7",

|

||||

"@types/marked": "^5.0.1",

|

||||

"@types/mime-types": "^2.1.0",

|

||||

"@types/node-schedule": "^2.1.0",

|

||||

"@types/parse-github-url": "^1.0.0",

|

||||

"@types/passport": "^1.0.11",

|

||||

"@types/passport-github2": "^1.2.5",

|

||||

"@types/rate-limit-redis": "^1.7.4",

|

||||

"@types/tar-fs": "^2.0.1",

|

||||

"@types/unzip-stream": "^0.3.1",

|

||||

"@types/xml-flow": "^1.0.1",

|

||||

"chai": "^4.3.6",

|

||||

"mocha": "^10.1.0",

|

||||

"nodemon": "^3.0.1",

|

||||

"ts-node": "^10.9.1",

|

||||

"typescript": "^4.8.4"

|

||||

},

|

||||

|

||||

@@ -133,6 +133,12 @@ a:hover {

|

||||

color: var(--link-hover-color);

|

||||

}

|

||||

|

||||

.markdown-body .emoji {

|

||||

height: 1.3em;

|

||||

margin: 0;

|

||||

vertical-align: -0.1em;

|

||||

}

|

||||

|

||||

.navbar {

|

||||

background: var(--header-bg-color) !important;

|

||||

}

|

||||

|

||||

@@ -1,5 +1,8 @@

|

||||

{

|

||||

"ERRORS": {

|

||||

"unknown_error": "Unknown error, contact the admin.",

|

||||

"unreachable": "Anonymous GitHub is unreachable, contact the admin.",

|

||||

"request_error": "Unable to download the file, check your connection or contact the admin.",

|

||||

"repo_not_found": "The repository is not found.",

|

||||

"repo_not_accessible": "Anonymous GitHub is unable to or is forbidden to access the repository.",

|

||||

"repository_expired": "The repository is expired",

|

||||

|

||||

@@ -50,19 +50,23 @@

|

||||

<script src="/script/external/angular-translate-loader-static-files.min.js"></script>

|

||||

<script src="/script/external/angular-sanitize.min.js"></script>

|

||||

<script src="/script/external/angular-route.min.js"></script>

|

||||

<script src="/script/external/ana.min.js"></script>

|

||||

|

||||

<script src="/script/external/jquery-3.4.1.min.js"></script>

|

||||

<script src="/script/external/popper.min.js"></script>

|

||||

<script src="/script/external/bootstrap.min.js"></script>

|

||||

|

||||

<!-- PDF -->

|

||||

<script src="/script/external/pdf.compat.js"></script>

|

||||

<script src="/script/external/pdf.js"></script>

|

||||

|

||||

<!-- Code -->

|

||||

<script src="/script/external/ace/ace.js"></script>

|

||||

<script src="/script/external/ui-ace.min.js"></script>

|

||||

<script src="/script/langColors.js"></script>

|

||||

|

||||

<!-- Notebook -->

|

||||

<script src="/script/external/github-emojis.js"></script>

|

||||

<script src="/script/external/marked-emoji.js"></script>

|

||||

<script src="/script/external/marked.min.js"></script>

|

||||

<script src="/script/external/purify.min.js"></script>

|

||||

<script src="/script/external/ansi_up.min.js"></script>

|

||||

|

||||

@@ -148,5 +148,79 @@

|

||||

There is no job to display.

|

||||

</li>

|

||||

</ul>

|

||||

<h1>Remove Cache</h1>

|

||||

<ul class="p-0 m-0 w-100">

|

||||

<li

|

||||

class="col-12 d-flex px-0 py-3 border-bottom color-border-secondary"

|

||||

ng-repeat="job in removeCaches as filteredRemoveCache"

|

||||

>

|

||||

<div class="w-100">

|

||||

<div class="">

|

||||

<h3>

|

||||

<a target="__blank" ng-href="/r/{{job.id}}" ng-bind="job.id"></a>

|

||||

<span class="badge" ng-bind="job.progress.status | title"></span>

|

||||

</h3>

|

||||

</div>

|

||||

<div class="color-text-secondary mb-1">

|

||||

<span ng-if="job.timestamp">

|

||||

Created on:

|

||||

<span ng-bind="job.timestamp | humanTime"></span>

|

||||

</span>

|

||||

<span ng-if="job.finishedOn">

|

||||

Finished on:

|

||||

<span ng-bind="job.finishedOn | humanTime"></span>

|

||||

</span>

|

||||

<span ng-if="job.processedOn">

|

||||

Processed on:

|

||||

<span ng-bind="job.processedOn | humanTime"></span>

|

||||

</span>

|

||||

</div>

|

||||

<div>

|

||||

<pre

|

||||

ng-repeat="stack in job.stacktrace track by $index"

|

||||

><code ng-bind="stack"></code></pre>

|

||||

</div>

|

||||

</div>

|

||||

<div class="d-flex">

|

||||

<div class="dropdown">

|

||||

<button

|

||||

class="btn black_border dropdown-toggle btn-sm"

|

||||

type="button"

|

||||

id="dropdownMenuButton"

|

||||

data-toggle="dropdown"

|

||||

aria-haspopup="true"

|

||||

aria-expanded="false"

|

||||

>

|

||||

Actions

|

||||

</button>

|

||||

<div class="dropdown-menu" aria-labelledby="dropdownMenuButton">

|

||||

<a

|

||||

class="dropdown-item"

|

||||

href="#"

|

||||

ng-click="removeJob('remove', job)"

|

||||

>

|

||||

<i class="fas fa-trash-alt"></i> Remove

|

||||

</a>

|

||||

<a

|

||||

class="dropdown-item"

|

||||

href="#"

|

||||

ng-click="retryJob('remove', job)"

|

||||

>

|

||||

<i class="fas fa-sync"></i> Retry

|

||||

</a>

|

||||

<a class="dropdown-item" href="/anonymize/{{job.id}}">

|

||||

<i class="far fa-edit" aria-hidden="true"></i> Edit

|

||||

</a>

|

||||

</div>

|

||||

</div>

|

||||

</div>

|

||||

</li>

|

||||

<li

|

||||

class="col-12 d-flex px-0 py-3 border-bottom color-border-secondary"

|

||||

ng-if="filteredRemoveCache.length == 0"

|

||||

>

|

||||

There is no job to display.

|

||||

</li>

|

||||

</ul>

|

||||

</div>

|

||||

</div>

|

||||

|

||||

@@ -313,6 +313,9 @@

|

||||

Actions

|

||||

</button>

|

||||

<div class="dropdown-menu" aria-labelledby="dropdownMenuButton">

|

||||

<a class="dropdown-item" href="#" ng-click="removeCache(repo)">

|

||||

<i class="fas fa-trash-alt"></i> Remove Cache

|

||||

</a>

|

||||

<a class="dropdown-item" href="/anonymize/{{repo.repoId}}">

|

||||

<i class="far fa-edit" aria-hidden="true"></i> Edit

|

||||

</a>

|

||||

|

||||

@@ -1,9 +1,15 @@

|

||||

<div class="container page">

|

||||

<div class="row">

|

||||

<h1>

|

||||

<img ng-src="{{userInfo.photo}}" ng-if="userInfo.photo" width="30" height="30" class="rounded-circle ng-scope">

|

||||

<img

|

||||

ng-src="{{userInfo.photo}}"

|

||||

ng-if="userInfo.photo"

|

||||

width="30"

|

||||

height="30"

|

||||

class="rounded-circle ng-scope"

|

||||

/>

|

||||

{{userInfo.username}}

|

||||

<span class="badge"><span ng-bind="userInfo.status | title"></span>

|

||||

<span class="badge"><span ng-bind="userInfo.status | title"></span></span>

|

||||

</h1>

|

||||

<div class="row mb-3 m-0 py-2 border">

|

||||

<div class="col-2 font-weight-bold">ID</div>

|

||||

@@ -16,12 +22,47 @@

|

||||

<div class="col-10">{{userInfo.accessTokens.github}}</div>

|

||||

|

||||

<div class="col-2 font-weight-bold">Github</div>

|

||||

<div class="col-10"><a ng-href="https://github.com/{{userInfo.username}}">{{userInfo.username}}</a></div>

|

||||

<div class="col-10">

|

||||

<a ng-href="https://github.com/{{userInfo.username}}"

|

||||

>{{userInfo.username}}</a

|

||||

>

|

||||

</div>

|

||||

|

||||

<div class="col-2 font-weight-bold">Github Repositories</div>

|

||||

<div class="col-10">{{userInfo.repositories.length}}</a></div>

|

||||

<div class="col-10" ng-click="showRepos =!showRepos">

|

||||

{{userInfo.repositories.length}}

|

||||

</div>

|

||||

<button

|

||||

class="btn btn-primary m-1 mx-3"

|

||||

ng-click="getGitHubRepositories()"

|

||||

>

|

||||

Regresh Repositories

|

||||

</button>

|

||||

<ul class="m-0 col-12" ng-if="showRepos">

|

||||

<li

|

||||

class="col-12 d-flex px-0 py-3 border-bottom color-border-secondary"

|

||||

ng-repeat="repo in userInfo.repositories"

|

||||

>

|

||||

<div class="w-100">

|

||||

<div class="">

|

||||

{{repo.name}}

|

||||

</div>

|

||||

<div class="color-text-secondary mt-2">

|

||||

<span

|

||||

class="ml-0 mr-3"

|

||||

title="Size: {{::repo.size | humanFileSize}}"

|

||||

data-toggle="tooltip"

|

||||

data-placement="bottom"

|

||||

>

|

||||

<i class="fas fa-database"></i> {{::repo.size |

|

||||

humanFileSize}}</span

|

||||

>

|

||||

</div>

|

||||

</div>

|

||||

</li>

|

||||

</ul>

|

||||

</div>

|

||||

|

||||

|

||||

<h3>Repositories {{repositories.length}}</h3>

|

||||

<div class="border-bottom color-border-secondary py-3 w-100">

|

||||

<div class="d-flex flex-items-start w-100">

|

||||

@@ -245,6 +286,64 @@

|

||||

>

|

||||

</div>

|

||||

</div>

|

||||

<div class="d-flex">

|

||||

<div class="dropdown">

|

||||

<button

|

||||

class="btn black_border dropdown-toggle btn-sm"

|

||||

type="button"

|

||||

id="dropdownMenuButton"

|

||||

data-toggle="dropdown"

|

||||

aria-haspopup="true"

|

||||

aria-expanded="false"

|

||||

>

|

||||

Actions

|

||||

</button>

|

||||

<div class="dropdown-menu" aria-labelledby="dropdownMenuButton">

|

||||

<a class="dropdown-item" href="#" ng-click="removeCache(repo)">

|

||||

<i class="fas fa-trash-alt"></i> Remove Cache

|

||||

</a>

|

||||

<a class="dropdown-item" href="/anonymize/{{repo.repoId}}">

|

||||

<i class="far fa-edit" aria-hidden="true"></i> Edit

|

||||

</a>

|

||||

<a

|

||||

class="dropdown-item"

|

||||

href="#"

|

||||

ng-show="repo.status == 'ready' || repo.status == 'error'"

|

||||

ng-click="updateRepository(repo)"

|

||||

>

|

||||

<i class="fas fa-sync"></i> Force update

|

||||

</a>

|

||||

<a

|

||||

class="dropdown-item"

|

||||

href="#"

|

||||

ng-show="repo.status == 'removed'"

|

||||

ng-click="updateRepository(repo)"

|

||||

>

|

||||

<i class="fas fa-check-circle"></i>

|

||||

Enable

|

||||

</a>

|

||||

<a

|

||||

class="dropdown-item"

|

||||

href="#"

|

||||

ng-show="repo.status == 'ready'"

|

||||

ng-click="removeRepository(repo)"

|

||||

>

|

||||

<i class="fas fa-trash-alt"></i> Remove

|

||||

</a>

|

||||

<a class="dropdown-item" href="/r/{{repo.repoId}}/">

|

||||

<i class="fa fa-eye" aria-hidden="true"></i> View Repo

|

||||

</a>

|

||||

<a

|

||||

class="dropdown-item"

|

||||

href="/w/{{repo.repoId}}/"

|

||||

target="_self"

|

||||

ng-if="repo.options.page && repo.status == 'ready'"

|

||||

>

|

||||

<i class="fas fa-globe"></i> View Page

|

||||

</a>

|

||||

</div>

|

||||

</div>

|

||||

</div>

|

||||

</li>

|

||||

<li

|

||||

class="col-12 d-flex px-0 py-3 border-bottom color-border-secondary"

|

||||

|

||||

@@ -13,14 +13,9 @@

|

||||

name="anonymize"

|

||||

novalidate

|

||||

>

|

||||

<h5 class="card-title">Anonymize a repository</h5>

|

||||

<h6 class="card-subtitle mb-2 text-muted">

|

||||

Fill the information to anonymize! It will only take 5min.

|

||||

</h6>

|

||||

<h2>Source</h2>

|

||||

<h3 class="card-title mb-3">Anonymize your repository</h3>

|

||||

<!-- repoUrl -->

|

||||

<div class="form-group">

|

||||

<label for="repoUrl">Type the url of your repository</label>

|

||||

<div class="form-group mb-0">

|

||||

<input

|

||||

type="text"

|

||||

class="form-control"

|

||||

@@ -28,6 +23,7 @@

|

||||

id="repoUrl"

|

||||

ng-class="{'is-invalid': anonymize.repoUrl.$invalid}"

|

||||

ng-model="repoUrl"

|

||||

placeholder="URL of your GitHub repository"

|

||||

ng-model-options="{ debounce: {default: 1000, blur: 0, click: 0}, updateOn: 'default blur click' }"

|

||||

ng-change="repoSelected()"

|

||||

/>

|

||||

@@ -58,37 +54,6 @@

|

||||

{{repoUrl}} is already anonymized

|

||||

</div>

|

||||

</div>

|

||||

<!-- select repo -->

|

||||

<div class="form-group" ng-hide="repoUrl">

|

||||

<label for="repositories">Or select one of your repository</label>

|

||||

<div class="input-group mb-3">

|

||||

<select

|

||||

class="form-control"

|

||||

id="repositories"

|

||||

name="repositories"

|

||||

ng-model="repoUrl"

|

||||

ng-change="repoSelected()"

|

||||

>

|

||||

<option selected value="">None</option>

|

||||

<option

|

||||

ng-repeat="repo in repositories|orderBy:'fullName'"

|

||||

value="https://github.com/{{ repo.fullName }}"

|

||||

ng-bind="repo.fullName"

|

||||

></option>

|

||||

</select>

|

||||

<div class="input-group-append">

|

||||

<button

|

||||

class="btn btn-outline-secondary"

|

||||

ng-click="getRepositories(true)"

|

||||

title="Refresh!"

|

||||

data-toggle="tooltip"

|

||||

data-placement="bottom"

|

||||

>

|

||||

<i class="fa fa-undo"></i>

|

||||

</button>

|

||||

</div>

|

||||

</div>

|

||||

</div>

|

||||

<div ng-show="repoUrl">

|

||||

<!-- Branch -->

|

||||

<div class="form-group">

|

||||

@@ -386,29 +351,6 @@

|

||||

>Display Notebooks</label

|

||||

>

|

||||

</div>

|

||||

|

||||

<div class="form-group">

|

||||

<label for="mode">Proxy mode</label>

|

||||

<select

|

||||

class="form-control"

|

||||

id="mode"

|

||||

name="mode"

|

||||

ng-model="source.type"

|

||||

>

|

||||

<option value="GitHubStream" selected>Stream</option>

|

||||

<option value="GitHubDownload">Download</option>

|

||||

</select>

|

||||

<small class="form-text text-muted"

|

||||

>How the repository will be anonymized. Stream mode

|

||||

will request the content on the flight. This is the

|

||||

only option for repositories bigger than

|

||||

{{site_options.MAX_REPO_SIZE * 1024| humanFileSize}}.

|

||||

This repository is {{details.size * 8 *1024 |

|

||||

humanFileSize}}. Download will download the repository

|

||||

the repository on the anonymous.4open.science server,

|

||||

it is faster and offer more features.</small

|

||||

>

|

||||

</div>

|

||||

</div>

|

||||

<div class="form-group">

|

||||

<div class="form-check">

|

||||

|

||||

@@ -242,7 +242,7 @@

|

||||

<div class="d-flex d-inline-flex mt-2">

|

||||

<div class="alert alert-info alert-dismissible my-0 ml-1" ng-show="!v" role="alert" ng-repeat="(f, v) in filters.status">

|

||||

<strong>{{f | title}}</strong>

|

||||

<button type="button" class="close" data-dismiss="alert" aria-label="Close">

|

||||

<button type="button" class="close" data-dismiss="alert" aria-label="Close" ng-click="filters.status[f] = true;">

|

||||

<span aria-hidden="true">×</span>

|

||||

</button>

|

||||

</div>

|

||||

|

||||

@@ -3,7 +3,12 @@

|

||||

<div class="leftCol shadow p-1 overflow-auto" ng-show="files">

|

||||

<tree class="files" file="files"></tree>

|

||||

<div class="bottom column">

|

||||

<div class="last-update">

|

||||

<div

|

||||

class="last-update"

|

||||

data-toggle="tooltip"

|

||||

data-placement="top"

|

||||

title="{{options.lastUpdateDate}}"

|

||||

>

|

||||

Last Update: {{options.lastUpdateDate|date}}

|

||||

</div>

|

||||

</div>

|

||||

@@ -20,6 +25,12 @@

|

||||

ng-href="{{url}}"

|

||||

target="__self"

|

||||

class="btn btn-outline-primary btn-sm"

|

||||

>View raw</a

|

||||

>

|

||||

<a

|

||||

ng-href="{{url}}?download=true"

|

||||

target="__self"

|

||||

class="btn btn-outline-primary btn-sm"

|

||||

>Download file</a

|

||||

>

|

||||

<a

|

||||

|

||||

@@ -2,6 +2,7 @@

|

||||

<div ng-if="type == 'html'" ng-bind-html="content" class="file-content markdown-body"></div>

|

||||

<div ng-if="type == 'code' && content != null" ui-ace="aceOption" ng-model="content"></div>

|

||||

<img ng-if="type == 'image'" class="image-content" ng-src="{{url}}"></img>

|

||||

<iframe class="h-100 overflow-auto w-100 b-0" ng-if="type == 'media'" ng-src="{{url}}"></iframe>

|

||||

<div class="h-100 overflow-auto" ng-if="type == 'pdf'">

|

||||

<pdfviewer class="h-100 overflow-auto" src="{{url}}" id="viewer"></pdfviewer>

|

||||

</div>

|

||||

|

||||

@@ -20,22 +20,56 @@ angular

|

||||

$scope.query = {

|

||||

page: 1,

|

||||

limit: 25,

|

||||

sort: "source.repositoryName",

|

||||

sort: "lastView",

|

||||

search: "",

|

||||

ready: true,

|

||||

expired: true,

|

||||

removed: true,

|

||||

ready: false,

|

||||

expired: false,

|

||||

removed: false,

|

||||

error: true,

|

||||

preparing: true,

|

||||

};

|

||||

|

||||

$scope.removeCache = (repo) => {

|

||||

$http.delete("/api/admin/repos/" + repo.repoId).then(

|

||||

(res) => {

|

||||

$scope.$apply();

|

||||

},

|

||||

(err) => {

|

||||

console.error(err);

|

||||

}

|

||||

);

|

||||

};

|

||||

|

||||

$scope.updateRepository = (repo) => {

|

||||

const toast = {

|

||||

title: `Refreshing ${repo.repoId}...`,

|

||||

date: new Date(),

|

||||

body: `The repository ${repo.repoId} is going to be refreshed.`,

|

||||

};

|

||||

$scope.toasts.push(toast);

|

||||

repo.s;

|

||||

|

||||

$http.post(`/api/repo/${repo.repoId}/refresh`).then(

|

||||

(res) => {

|

||||

if (res.data.status == "ready") {

|

||||

toast.title = `${repo.repoId} is refreshed.`;

|

||||

} else {

|

||||

toast.title = `Refreshing of ${repo.repoId}.`;

|

||||

}

|

||||

},

|

||||

(error) => {

|

||||

toast.title = `Error during the refresh of ${repo.repoId}.`;

|

||||

toast.body = error.body;

|

||||

}

|

||||

);

|

||||

};

|

||||

|

||||

function getRepositories() {

|

||||

$http.get("/api/admin/repos", { params: $scope.query }).then(

|

||||

(res) => {

|

||||

$scope.total = res.data.total;

|

||||

$scope.totalPage = Math.ceil(res.data.total / $scope.query.limit);

|

||||

$scope.repositories = res.data.results;

|

||||

$scope.$apply();

|

||||

},

|

||||

(err) => {

|

||||

console.error(err);

|

||||

@@ -138,7 +172,7 @@ angular

|

||||

|

||||

return false;

|

||||

};

|

||||

|

||||

|

||||

function getUserRepositories(username) {

|

||||

$http.get("/api/admin/users/" + username + "/repos", {}).then(

|

||||

(res) => {

|

||||

@@ -162,6 +196,51 @@ angular

|

||||

getUser($routeParams.username);

|

||||

getUserRepositories($routeParams.username);

|

||||

|

||||

$scope.removeCache = (repo) => {

|

||||

$http.delete("/api/admin/repos/" + repo.repoId).then(

|

||||

(res) => {

|

||||

$scope.$apply();

|

||||

},

|

||||

(err) => {

|

||||

console.error(err);

|

||||

}

|

||||

);

|

||||

};

|

||||

|

||||

$scope.updateRepository = (repo) => {

|

||||

const toast = {

|

||||

title: `Refreshing ${repo.repoId}...`,

|

||||

date: new Date(),

|

||||

body: `The repository ${repo.repoId} is going to be refreshed.`,

|

||||

};

|

||||

$scope.toasts.push(toast);

|

||||

repo.s;

|

||||

|

||||

$http.post(`/api/repo/${repo.repoId}/refresh`).then(

|

||||

(res) => {

|

||||

if (res.data.status == "ready") {

|

||||

toast.title = `${repo.repoId} is refreshed.`;

|

||||

} else {

|

||||

toast.title = `Refreshing of ${repo.repoId}.`;

|

||||

}

|

||||

},

|

||||

(error) => {

|

||||

toast.title = `Error during the refresh of ${repo.repoId}.`;

|

||||

toast.body = error.body;

|

||||

}

|

||||

);

|

||||

};

|

||||

|

||||

$scope.getGitHubRepositories = (force) => {

|

||||

$http

|

||||

.get(`/api/user/${$scope.userInfo.username}/all_repositories`, {

|

||||

params: { force: "1" },

|

||||

})

|

||||

.then((res) => {

|

||||

$scope.userInfo.repositories = res.data;

|

||||

});

|

||||

};

|

||||

|

||||

let timeClear = null;

|

||||

$scope.$watch(

|

||||

"query",

|

||||

@@ -247,6 +326,7 @@ angular

|

||||

(res) => {

|

||||

$scope.downloadJobs = res.data.downloadQueue;

|

||||

$scope.removeJobs = res.data.removeQueue;

|

||||

$scope.removeCaches = res.data.cacheQueue;

|

||||

},

|

||||

(err) => {

|

||||

console.error(err);

|

||||

|

||||

@@ -5,17 +5,9 @@ angular

|

||||

"ui.ace",

|

||||

"ngPDFViewer",

|

||||

"pascalprecht.translate",

|

||||

"angular-google-analytics",

|

||||

"admin",

|

||||

])

|

||||

.config(function (

|

||||

$routeProvider,

|

||||

$locationProvider,

|

||||

$translateProvider,

|

||||

AnalyticsProvider

|

||||

) {

|

||||

AnalyticsProvider.setAccount("UA-5954162-28");

|

||||

|

||||

.config(function ($routeProvider, $locationProvider, $translateProvider) {

|

||||

$translateProvider.useStaticFilesLoader({

|

||||

prefix: "/i18n/locale-",

|

||||

suffix: ".json",

|

||||

@@ -142,7 +134,6 @@ angular

|

||||

});

|

||||

$locationProvider.html5Mode(true);

|

||||

})

|

||||

.run(["Analytics", function (Analytics) {}])

|

||||

.filter("humanFileSize", function () {

|

||||

return function humanFileSize(bytes, si = false, dp = 1) {

|

||||

const thresh = si ? 1000 : 1024;

|

||||

@@ -259,7 +250,7 @@ angular

|

||||

},

|

||||

link: function (scope, elem, attrs) {

|

||||

function update() {

|

||||

elem.html(marked(scope.content, { baseUrl: $location.url() }));

|

||||

elem.html(renderMD(scope.content, $location.url()));

|

||||

}

|

||||

scope.$watch(attrs.terms, update);

|

||||

scope.$watch("terms", update);

|

||||

@@ -415,18 +406,30 @@ angular

|

||||

restrict: "E",

|

||||

scope: { file: "=" },

|

||||

controller: function ($element, $scope, $http) {

|

||||

function renderNotebookJSON(json) {

|

||||

const notebook = nb.parse(json);

|

||||

try {

|

||||

$element.html("");

|

||||

$element.append(notebook.render());

|

||||

Prism.highlightAll();

|

||||

} catch (error) {

|

||||

$element.html("Unable to render the notebook.");

|

||||

}

|

||||

}

|

||||

function render() {

|

||||

if (!$scope.file) return;

|

||||

$http.get($scope.file).then((res) => {

|

||||

var notebook = nb.parse(res.data);

|

||||

if ($scope.$parent.content) {

|

||||

try {

|

||||

var rendered = notebook.render();

|

||||

$element.append(rendered);

|

||||

Prism.highlightAll();

|

||||

renderNotebookJSON(JSON.parse($scope.$parent.content));

|

||||

} catch (error) {

|

||||

$element.html("Unable to render the notebook.");

|

||||

$element.html(

|

||||

"Unable to render the notebook invalid notebook format."

|

||||

);

|

||||

}

|

||||

});

|

||||

} else if ($scope.file) {

|

||||

$http

|

||||

.get($scope.file.download_url)

|

||||

.then((res) => renderNotebookJSON(res.data));

|

||||

}

|

||||

}

|

||||

$scope.$watch("file", (v) => {

|

||||

render();

|

||||

@@ -508,7 +511,6 @@ angular

|

||||

$http.get("/api/user").then(

|

||||

(res) => {

|

||||

if (res) $scope.user = res.data;

|

||||

getQuota();

|

||||

},

|

||||

() => {

|

||||

$scope.user = null;

|

||||

@@ -528,22 +530,6 @@ angular

|

||||

);

|

||||

}

|

||||

getOptions();

|

||||

function getQuota() {

|

||||

$http.get("/api/user/quota").then((res) => {

|

||||

$scope.quota = res.data;

|

||||

$scope.quota.storage.percent = $scope.quota.storage.total

|

||||

? ($scope.quota.storage.used * 100) / $scope.quota.storage.total

|

||||

: 100;

|

||||

$scope.quota.file.percent = $scope.quota.file.total

|

||||

? ($scope.quota.file.used * 100) / $scope.quota.file.total

|

||||

: 100;

|

||||

$scope.quota.repository.percent = $scope.quota.repository.total

|

||||

? ($scope.quota.repository.used * 100) /

|

||||

$scope.quota.repository.total

|

||||

: 100;

|

||||

}, console.error);

|

||||

}

|

||||

getQuota();

|

||||

|

||||

function getMessage() {

|

||||

$http.get("/api/message").then(

|

||||

@@ -703,6 +689,23 @@ angular

|

||||

};

|

||||

$scope.orderBy = "-anonymizeDate";

|

||||

|

||||

function getQuota() {

|

||||

$http.get("/api/user/quota").then((res) => {

|

||||

$scope.quota = res.data;

|

||||

$scope.quota.storage.percent = $scope.quota.storage.total

|

||||

? ($scope.quota.storage.used * 100) / $scope.quota.storage.total

|

||||

: 100;

|

||||

$scope.quota.file.percent = $scope.quota.file.total

|

||||

? ($scope.quota.file.used * 100) / $scope.quota.file.total

|

||||

: 100;

|

||||

$scope.quota.repository.percent = $scope.quota.repository.total

|

||||

? ($scope.quota.repository.used * 100) /

|

||||

$scope.quota.repository.total

|

||||

: 100;

|

||||

}, console.error);

|

||||

}

|

||||

getQuota();

|

||||

|

||||

function getRepositories() {

|

||||

$http.get("/api/user/anonymized_repositories").then(

|

||||

(res) => {

|

||||
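Reviewer note: the three quota ratios in the `getQuota` hunk above follow the same pattern, so the calculation can be read as one small helper. The sketch below is only an illustration of that pattern; `percentUsed` and `withPercent` are hypothetical names that do not appear in the commit, and the `{ used, total }` shape is assumed from the fields the hunk reads.

```javascript
// Hypothetical helper (not part of the commit): percentage of a quota in use.
// Mirrors the ternary in the hunk: a falsy total (0 or undefined) is reported as 100.
function percentUsed(used, total) {
  return total ? (used * 100) / total : 100;
}

// Hypothetical helper: fills in `percent` for the three quota kinds read by the hunk.
// Assumes each entry has numeric `used` and `total` fields.
function withPercent(quota) {
  for (const key of ["storage", "file", "repository"]) {
    quota[key].percent = percentUsed(quota[key].used, quota[key].total);
  }
  return quota;
}
```

With a helper of this kind, the callback would reduce to something like `$scope.quota = withPercent(res.data)`, assuming the response keeps the shape used in the hunk.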

@@ -987,9 +990,7 @@ angular

|

||||

$scope.terms = "";

|

||||

$scope.defaultTerms = "";

|

||||

$scope.branches = [];

|

||||

$scope.repositories = [];

|

||||

$scope.source = {

|

||||

type: "GitHubStream",

|

||||

branch: "",

|

||||

commit: "",

|

||||

};

|

||||

@@ -1067,17 +1068,6 @@ angular

|

||||

}

|

||||

});

|

||||

|

||||

$scope.getRepositories = (force) => {

|

||||

$http

|

||||

.get("/api/user/all_repositories", {

|

||||

params: { force: force === true ? "1" : "0" },

|

||||

})

|

||||

.then((res) => {

|

||||

$scope.repositories = res.data;

|

||||

});

|

||||

};

|

||||

$scope.getRepositories();

|

||||

|

||||

$scope.repoSelected = async () => {

|

||||

$scope.terms = $scope.defaultTerms;

|

||||

$scope.repoId = "";

|

||||

@@ -1164,15 +1154,9 @@ angular

|

||||

resetValidity();

|

||||

const res = await $http.get(`/api/repo/${o.owner}/${o.repo}/`);

|

||||

$scope.details = res.data;

|

||||

if ($scope.details.size > $scope.site_options.MAX_REPO_SIZE) {

|

||||

$scope.anonymize.mode.$$element[0].disabled = true;

|

||||

|

||||

$scope.$apply(() => {

|

||||

$scope.source.type = "GitHubStream";

|

||||

checkSourceType();

|

||||

});

|

||||

if (!$scope.repoId) {

|

||||

$scope.repoId = $scope.details.repo + "-" + generateRandomId(4);

|

||||

}

|

||||

$scope.repoId = $scope.details.repo + "-" + generateRandomId(4);

|

||||

await $scope.getBranches();

|

||||

} catch (error) {

|

||||

console.log("here", error);

|

||||

@@ -1327,7 +1311,7 @@ angular

|

||||

}

|

||||

|

||||

$scope.anonymize_readme = content;

|

||||

const html = marked($scope.anonymize_readme);

|

||||

const html = renderMD($scope.anonymize_readme, $location.url());

|

||||

$scope.html_readme = $sce.trustAsHtml(html);

|

||||

setTimeout(Prism.highlightAll, 150);

|

||||

}

|

||||

@@ -1439,7 +1423,7 @@ angular

|

||||

const selected = $scope.branches.filter(

|

||||

(f) => f.name == $scope.source.branch

|

||||

)[0];

|

||||

checkSourceType();

|

||||

checkHasPage();

|

||||

|

||||

if (selected) {

|

||||

$scope.source.commit = selected.commit;

|

||||

@@ -1450,22 +1434,15 @@ angular

|

||||

}

|

||||

});

|

||||

|

||||

function checkSourceType() {

|

||||

if ($scope.source.type == "GitHubStream") {

|

||||

$scope.options.page = false;

|

||||

//$scope.anonymize.page.$$element[0].disabled = true;

|

||||

} else {

|

||||

if ($scope.details && $scope.details.hasPage) {

|

||||

$scope.anonymize.page.$$element[0].disabled = false;

|

||||

if ($scope.details.pageSource.branch != $scope.source.branch) {

|

||||

$scope.anonymize.page.$$element[0].disabled = true;

|

||||

}

|

||||

function checkHasPage() {

|

||||

if ($scope.details && $scope.details.hasPage) {

|

||||

$scope.anonymize.page.$$element[0].disabled = false;

|

||||

if ($scope.details.pageSource.branch != $scope.source.branch) {

|

||||

$scope.anonymize.page.$$element[0].disabled = true;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

$scope.$watch("source.type", checkSourceType);

|

||||

|

||||

$scope.$watch("terms", anonymize);

|

||||

$scope.$watch("options.image", anonymize);

|

||||

$scope.$watch("options.link", anonymize);

|

||||

@@ -1502,13 +1479,26 @@ angular

|

||||

"heif",

|

||||

"heic",

|

||||

];

|

||||

const mediaFiles = [

|

||||

"wav",

|

||||

"mp3",

|

||||

"ogg",

|

||||

"mp4",

|

||||

"avi",

|

||||

"webm",

|

||||

"mov",

|

||||

"mpg",

|

||||

"wma",

|

||||

];

|

||||

|

||||

$scope.$on("$routeUpdate", function (event, current) {

|

||||

if (($routeParams.path || "") == $scope.filePath) {

|

||||

return;

|

||||

}

|

||||

$scope.filePath = $routeParams.path || "";

|

||||

$scope.paths = $scope.filePath.split("/");

|

||||

$scope.paths = $scope.filePath

|

||||

.split("/")

|

||||

.filter((f) => f && f.trim().length > 0);

|

||||

|

||||

if ($scope.repoId != $routeParams.repoId) {

|

||||

return init();

|

||||

@@ -1517,45 +1507,55 @@ angular

|

||||

updateContent();

|

||||

});

|

||||

|

||||

function selectFile() {

|

||||

const readmePriority = [

|

||||

"readme.md",

|

||||

"readme.txt",

|

||||

"readme.org",

|

||||

"readme.1st",

|

||||

"readme",

|

||||

];

|

||||

// find current folder

|

||||

let currentFolder = $scope.files;

|

||||

for (const p of $scope.paths) {

|

||||

if (currentFolder[p]) {

|

||||

currentFolder = currentFolder[p];

|

||||

}

|

||||

}

|

||||

if (currentFolder.size && Number.isInteger(currentFolder.size)) {

|

||||

// a file is already selected

|

||||

return;

|

||||

}

|

||||

const readmeCandidates = {};

|

||||

for (const file in currentFolder) {

|

||||

if (file.toLowerCase().indexOf("readme") > -1) {

|

||||

readmeCandidates[file.toLowerCase()] = file;

|

||||

}

|

||||

}

|

||||

let best_match = null;

|

||||

for (const p of readmePriority) {

|

||||

if (readmeCandidates[p]) {

|

||||

best_match = p;

|

||||

break;

|

||||

}

|

||||

}

|

||||

if (!best_match && Object.keys(readmeCandidates).length > 0)

|

||||

best_match = Object.keys(readmeCandidates)[0];

|

||||

if (best_match) {

|

||||

let uri = $location.url();

|

||||

if (uri[uri.length - 1] != "/") {

|

||||

uri += "/";

|

||||

}

|

||||

|

||||

// redirect to readme

|

||||

$location.url(uri + readmeCandidates[best_match]);

|

||||

}

|

||||

}

|

||||

function getFiles(callback) {

|

||||

$http.get(`/api/repo/${$scope.repoId}/files/`).then(

|

||||

(res) => {

|

||||

$scope.files = res.data;

|

||||

if ($scope.paths.length == 0 || $scope.paths[0] == "") {

|

||||

// redirect to readme

|

||||

const readmeCandidates = {};

|

||||

for (const file in $scope.files) {

|

||||

if (file.toLowerCase().indexOf("readme") > -1) {

|

||||

readmeCandidates[file.toLowerCase()] = file;

|

||||

}

|

||||

}

|

||||

|

||||

const readmePriority = [

|

||||

"readme.md",

|

||||

"readme.txt",

|

||||

"readme.org",

|

||||

"readme.1st",

|

||||

"readme",

|

||||

];

|

||||

let best_match = null;

|

||||

for (const p of readmePriority) {

|

||||

if (readmeCandidates[p]) {

|

||||

best_match = p;

|

||||

break;

|

||||

}

|

||||

}

|

||||

if (!best_match && Object.keys(readmeCandidates).length > 0)

|

||||

best_match = Object.keys(readmeCandidates)[0];

|

||||

if (best_match) {

|

||||

let uri = $location.url();

|

||||

if (uri[uri.length - 1] != "/") {

|

||||

uri += "/";

|

||||

}

|

||||

|

||||

// redirect to readme

|

||||

$location.url(uri + readmeCandidates[best_match]);

|

||||

}

|

||||

}

|

||||

selectFile();

|

||||

if (callback) {

|

||||

return callback();

|

||||

}

|

||||
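Reviewer note: the README lookup that this hunk moves into `selectFile` can be summarized as a pure function over a folder listing. The following is a minimal sketch under stated assumptions, not the code from the commit: `pickReadme` and `README_PRIORITY` are hypothetical names, and the folder is assumed to be a plain object keyed by entry name, as `$scope.files` appears to be in the hunk.

```javascript
// Priority list copied from the hunk; the constant name is hypothetical.
const README_PRIORITY = [
  "readme.md",
  "readme.txt",
  "readme.org",
  "readme.1st",
  "readme",
];

// Hypothetical helper: returns the original name of the best README entry, or null.
function pickReadme(folder) {
  // Map lower-cased names to original names for entries containing "readme".
  const candidates = {};
  for (const name of Object.keys(folder)) {
    if (name.toLowerCase().includes("readme")) {
      candidates[name.toLowerCase()] = name;
    }
  }
  // Prefer well-known names in priority order.
  for (const p of README_PRIORITY) {
    if (candidates[p]) return candidates[p];
  }
  // Otherwise fall back to the first candidate found, if any.
  const keys = Object.keys(candidates);
  return keys.length > 0 ? candidates[keys[0]] : null;
}
```

Keeping the lookup free of `$scope` and `$location` would make it straightforward to unit test; the redirect itself (`$location.url(uri + name)`) would stay in the controller.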

@@ -1615,6 +1615,9 @@ angular

|

||||

if (imageFiles.indexOf(extension) > -1) {

|

||||

return "image";

|

||||

}

|

||||

if (mediaFiles.indexOf(extension) > -1) {

|

||||

return "media";

|

||||

}

|

||||

return "code";

|

||||

}

|

||||

|

||||

@@ -1642,9 +1645,8 @@ angular

|

||||

}

|

||||

|

||||

if ($scope.type == "md") {

|

||||

const md = contentAbs2Relative(res.data);

|

||||

$scope.content = $sce.trustAsHtml(

|

||||

marked(md, { baseUrl: $location.url() })

|

||||

renderMD(res.data, $location.url())

|

||||

);

|

||||

$scope.type = "html";

|

||||

}

|

||||

@@ -1668,13 +1670,22 @@ angular

|

||||

},

|

||||

(err) => {

|

||||

$scope.type = "error";

|

||||

$scope.content = "unknown_error";

|

||||

try {

|

||||

err.data = JSON.parse(err.data);

|

||||

} catch (ignore) {}

|

||||

if (err.data.error) {

|

||||

$scope.content = err.data.error;

|

||||

} else {

|

||||

$scope.content = err.data;

|

||||

if (err.data.error) {

|

||||

$scope.content = err.data.error;

|

||||

} else {

|

||||

$scope.content = err.data;

|

||||

}

|

||||

} catch (ignore) {

|

||||

console.log(err);

|

||||

if (err.status == -1) {

|

||||

$scope.content = "request_error";

|

||||

} else if (err.status == 502) {

|

||||

// cloudflare error

|

||||

$scope.content = "unreachable";

|

||||

}

|

||||

}

|

||||

}

|

||||

);

|

||||

|

||||

9

public/script/external/ana.min.js

vendored

File diff suppressed because one or more lines are too long

1879

public/script/external/github-emojis.js

vendored

Normal file

File diff suppressed because it is too large

57

public/script/external/marked-emoji.js

vendored

Normal file

@@ -0,0 +1,57 @@

|

||||

const defaultOptions = {

|

||||

// emojis: {}, required

|

||||

unicode: false,

|

||||

};

|

||||

|

||||